
As AI continues to reshape industries and drive new business tech trends, two prominent model families are making significant strides in the large language model (LLM) landscape: Meta’s Llama and Mistral. Both offer cutting-edge capabilities in natural language processing (NLP), content generation, and AI-powered applications. But when it comes to choosing between Llama and Mistral, which model performs better, and why?

In this comprehensive blog, we’ll break down the strengths and weaknesses of Llama and Mistral, exploring how they work, their unique features, and the performance comparisons between the two. By the end, you’ll have a clearer understanding of which model best suits your needs.

Meta Llama

Llama (Large Language Model Meta AI) is a family of large language models developed by Meta (formerly Facebook). First introduced in February 2023, Llama was created to offer businesses and developers a highly efficient, scalable, and customizable AI tool. Its primary goal was to provide an open alternative to closed AI tools like ChatGPT and the GPT-3 family of models, allowing users to fine-tune and adapt the model for specific tasks while maintaining high performance.

Meta Llama quickly gained popularity for its ability to balance scalability and efficiency, offering both small-scale and large-scale AI solutions. The model became even more competitive with the release of Llama 2 and Llama 3, which introduced significant improvements in performance, contextual understanding, and ethical AI development.

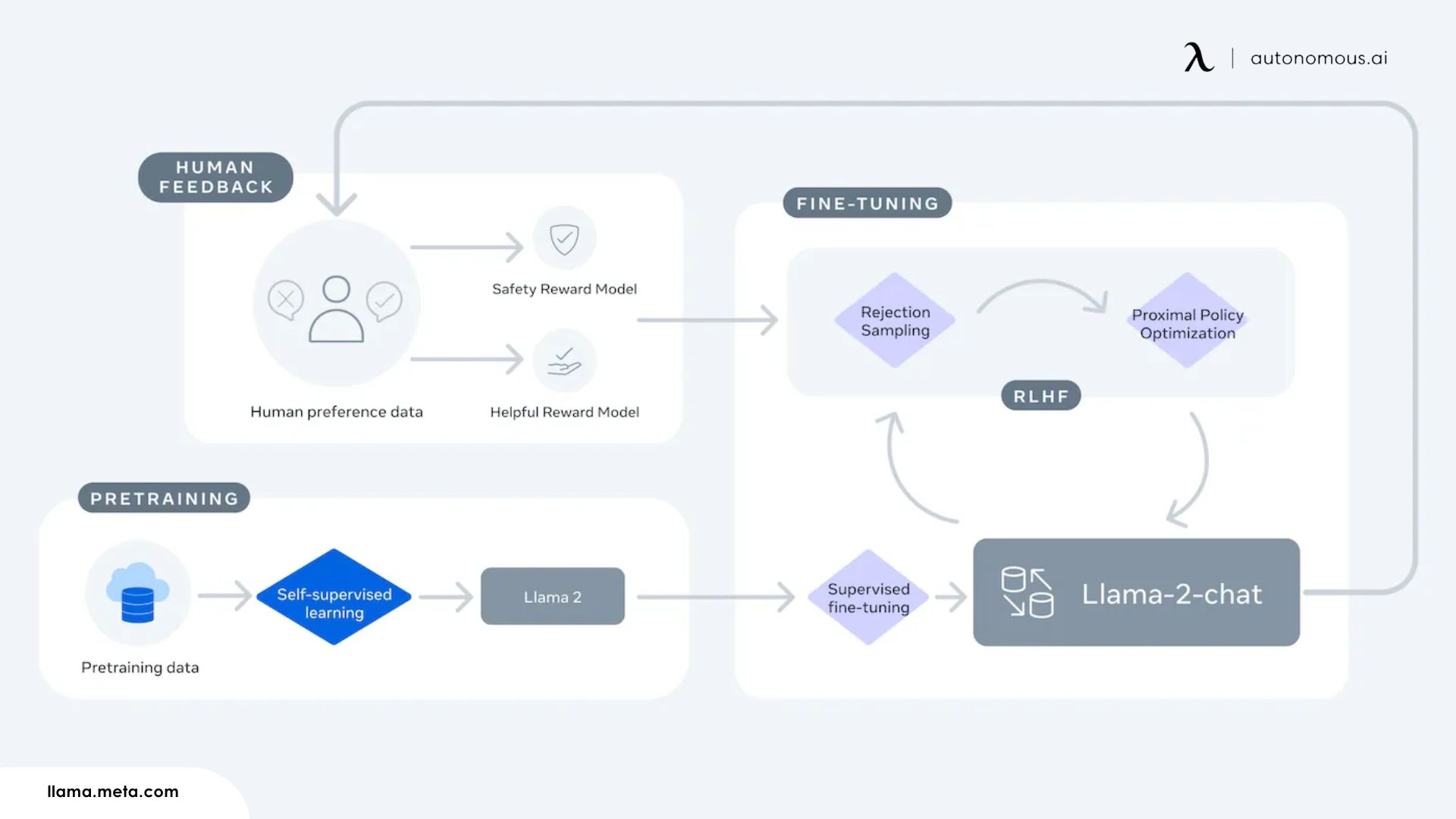

How Does Llama Work?

Llama operates on a transformer-based architecture, which is designed to handle sequential data processing, making it ideal for text generation and NLP tasks. The transformer model works by breaking down input data into tokens, which are then processed using self-attention mechanisms to understand the relationships between words in a sequence. This enables Llama to generate coherent, contextually relevant responses to a wide range of prompts.
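
To make the self-attention step concrete, here is a minimal pure-Python sketch of scaled dot-product attention. It is a toy illustration, not Llama's actual implementation: the three token vectors are made up, the function names are hypothetical, and the sketch leaves out the learned query/key/value projections, the multiple attention heads, and the causal mask that a real decoder-only model would use.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention where Q = K = V = vectors."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up 2-dimensional "embeddings" for three tokens (illustrative only).
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, and each row sums to 1.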

Llama’s flexibility lies in its ability to be fine-tuned for specific tasks. Developers can take the pre-trained Llama model and customize it using smaller, task-specific datasets, making it a versatile option for businesses looking for tailored AI solutions.

Key Features of Llama

- Open-source: One of Llama’s biggest advantages is its open-source availability, making it accessible for developers and businesses to customize as needed.

- Scalability: Llama can be scaled up for large enterprise applications or down for smaller tasks, depending on the specific use case.

- Efficient Architecture: Designed to be more efficient than some larger models, Llama balances high performance with lower computational costs.

- Customizability: Developers can fine-tune Llama for specific applications, giving them more control over how the AI behaves.

Meta Llama 2 and 3

Meta introduced Llama 2 in 2023, followed by Llama 3 in 2024, both of which introduced improvements in accuracy, speed, and ethical considerations.

Llama 2 built on the foundations of the original model, offering more advanced natural language understanding and better efficiency. Llama 3 further refined the model, improving its ability to handle multi-turn conversations and complex queries while incorporating better bias mitigation and ethical safeguards.

With these updates, Llama remains one of the top AI models for businesses looking to develop custom AI tools without the limitations of closed-source models.

Mistral: Frontier AI in Your Hands

Mistral AI is a relatively new entrant in the large language model space. It builds generative AI models for developers and businesses looking for open, portable, and highly efficient options. With a focus on open-weight models, Mistral allows users to customize, deploy, and fine-tune AI systems according to their needs.

Mistral's flagship models—Mistral Large and Mistral Nemo—are available under flexible licenses, including Apache 2.0 for unrestricted use, making them accessible for a broad range of applications. Mistral emphasizes transparency, portability, and speed, aiming to provide powerful AI technology that’s independent of cloud providers, ensuring greater flexibility for businesses.

One of the standout models in the lineup is Mistral 7B, a 7.3-billion-parameter model reported to outperform larger models like LLaMA2-13B and LLaMA1-34B on a range of benchmarks, with significantly lower computational overhead. Mistral models are portable and can be deployed on public cloud services, through serverless APIs, or in virtual private cloud (VPC) and on-premise environments.

How Does Mistral Work?

Mistral models are built on transformer architecture with a focus on parameter efficiency, which enables them to deliver high performance using fewer resources compared to larger models. One of the core innovations in Mistral’s design is its use of Grouped Query Attention (GQA) and Sliding Window Attention (SWA), which boost inference speed and allow the model to handle longer sequences more effectively. These technologies make Mistral models ideal for real-time applications that require fast, efficient processing, such as chatbots, virtual assistants, and code generation.
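
The idea behind Sliding Window Attention can be sketched as an attention mask: instead of looking at every earlier token, each position only looks at a fixed number of the most recent ones. The snippet below is a simplified illustration, not Mistral's implementation. The function name, the tiny sequence length, and the choice to count the current token as part of the window are all assumptions made for readability.

```python
def sliding_window_mask(seq_len, window):
    """Return mask[i][j] == True when position i may attend to position j.

    A position sees itself and earlier positions only (causal), and at most
    `window` positions in total (sliding window).
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


mask = sliding_window_mask(seq_len=6, window=3)
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
# Prints:
# 100000
# 110000
# 111000
# 011100
# 001110
# 000111

# Work done per sequence: full causal attention vs. the windowed version.
full_causal_pairs = sum(i + 1 for i in range(6))
windowed_pairs = sum(sum(row) for row in mask)
print(full_causal_pairs, windowed_pairs)  # 21 15
```

With full causal attention the number of attended pairs grows quadratically with sequence length, while the windowed version grows roughly linearly once the sequence is longer than the window. That is the intuition for why a fixed window helps with long inputs.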

The Mistral 7B model, for instance, has garnered attention for its ability to deliver high performance in tasks like natural language understanding, text generation, and even coding tasks, competing directly with CodeLlama-7B. Its compact size (13.4 GB) allows it to run efficiently on standard machines, giving developers access to powerful AI without the need for expensive, resource-intensive infrastructure.
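
The size figure can be sanity-checked with simple arithmetic. The sketch below assumes the weights are stored at 16 bits (2 bytes) per parameter, which is an assumption and not something stated in this article, and uses the 7.3 billion parameter count quoted above. The helper name is hypothetical.

```python
GIB = 2**30  # bytes in one gibibyte


def weight_size_bytes(num_params, bytes_per_param=2):
    """Approximate storage for model weights (assumes 2 bytes per parameter)."""
    return num_params * bytes_per_param


size = weight_size_bytes(7.3e9)
print(f"{size / 1e9:.1f} GB, {size / GIB:.1f} GiB")  # 14.6 GB, 13.6 GiB
```

The result lands in the same ballpark as the quoted 13.4 GB. Small differences are expected because the parameter count is rounded and because "GB" may refer to either decimal or binary units.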

Key Features of Mistral

Open-Weight Models

Mistral releases models with open weights, allowing users to download and deploy them on their own infrastructure. This openness promotes transparency and decentralization in AI development.

Mistral Nemo is available under the Apache 2.0 license, while Mistral Large is accessible through a free non-commercial license or a commercial license for enterprise use.

Portability

Mistral models are built to be deployed across various platforms, including Azure AI, Amazon Bedrock, and on-premise environments, providing flexibility for businesses. The independence from specific cloud providers ensures that users can deploy Mistral in their preferred environments without vendor lock-in.

Performance and Speed

Mistral models, particularly the Mistral 7B, have been shown to outperform larger models like LLaMA2-13B and LLaMA1-34B in various benchmarks, including commonsense reasoning, reading comprehension, and code-related tasks. This performance is achieved while maintaining a lower computational footprint, making Mistral highly efficient.

Grouped Query Attention (GQA) and Sliding Window Attention (SWA) help Mistral models process longer sequences faster, enhancing their performance in real-time applications.
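
Grouped Query Attention can be illustrated the same way. In standard multi-head attention every query head has its own key/value head; with GQA, several query heads share one key/value head, which shrinks the key/value cache kept in memory during generation. The sketch below is a back-of-the-envelope illustration under stated assumptions: the helper names are hypothetical, and the head counts, layer count, head dimension, and 2-byte values are illustrative numbers chosen for the example, not figures taken from this article.

```python
def kv_head_for_query_head(query_head, num_query_heads, num_kv_heads):
    """Map a query head index to the key/value head it shares under GQA."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Approximate KV cache size; the leading 2 covers keys plus values."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Illustrative configuration (assumed values, for the sake of the example).
layers, head_dim, seq_len = 32, 128, 4096
query_heads, kv_heads = 32, 8

# Query heads 0-3 share KV head 0, heads 4-7 share KV head 1, and so on.
print([kv_head_for_query_head(h, query_heads, kv_heads) for h in range(8)])
# [0, 0, 0, 0, 1, 1, 1, 1]

multi_head = kv_cache_bytes(layers, query_heads, head_dim, seq_len)
grouped = kv_cache_bytes(layers, kv_heads, head_dim, seq_len)
print(multi_head / 2**30, grouped / 2**30)  # 2.0 0.5 (GiB)
```

Under these assumed numbers the cache is four times smaller with grouping, which means less memory traffic per generated token. That is one way to see why GQA is associated with faster inference.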

Customization

Mistral models are fully customizable, allowing developers to fine-tune the models for specific business needs. This customization enables companies to create differentiated AI applications that are tailored to their unique workflows and requirements.

Efficiency

Despite having only 7.3 billion parameters, Mistral 7B competes with much larger models, making it a more cost-effective AI tool for coding. It's perfect for coders looking for top-tier performance without the burden of resource-intensive deployments.

Comparison of Llama vs. Mistral

Mistral and LLaMA share some common goals, such as delivering powerful, efficient AI models to developers and businesses, but they differ in architecture, customization, and performance focus. Here’s how the two compare:

1. Performance

Designed as a versatile, general-purpose language model, LLaMA excels in NLP tasks such as content generation, translation, and chatbot development. Its open-source architecture allows developers to fine-tune and scale the model for a wide variety of applications. LLaMA2 and LLaMA3 offer strong performance, particularly in complex multi-turn conversations and deep text analysis.

The Mistral 7B model outperforms LLaMA2-13B in several key metrics, including commonsense reasoning, math tasks, and reading comprehension, despite having significantly fewer parameters. Mistral’s Grouped Query Attention (GQA) and Sliding Window Attention (SWA) technologies contribute to faster inference and greater efficiency, making it better suited for real-time applications where speed and resource efficiency are critical.

2. Customization and Flexibility

LLaMA AI is highly customizable, particularly because it’s open-source. Developers can fine-tune the model for their specific needs, and the flexibility of the LLaMA framework makes it ideal for a wide range of applications. Whether for research or enterprise, LLaMA’s ability to adapt is one of its strongest features.

While Mistral offers customization options through its open-weight models, it’s designed to be more streamlined and efficient. Mistral models can be modified and fine-tuned, but they are particularly focused on performance optimization rather than extensive customizability. However, Mistral’s portability, with options to deploy across public clouds, serverless APIs, or on-premise environments, gives it an edge for businesses that need flexibility in deployment.

3. Scalability

LLaMA offers excellent scalability, particularly with larger models like LLaMA 70B. It can be scaled up for enterprise-level applications or scaled down for smaller tasks, making it versatile for different business needs.

Mistral excels in terms of efficiency and scalability for real-time applications and mid-size projects. With its superior performance at a smaller size, Mistral 7B can handle tasks that typically require larger models, making it a cost-effective solution. However, for very large-scale deployments, LLaMA may offer more scalability.

4. Resource Efficiency

Mistral 7B is designed to be highly resource-efficient. Despite its smaller size, it outperforms models like LLaMA2-13B and even LLaMA1-34B in various benchmarks. This makes Mistral an attractive choice for businesses looking to deploy powerful AI without the need for heavy computational resources.

LLaMA is relatively efficient compared to traditional models like GPT-3, but it still requires significant resources for larger-scale models like LLaMA 70B. Businesses must invest in robust hardware or cloud infrastructure to fully leverage LLaMA’s capabilities.

5. Applications

LLaMA’s broad versatility makes it suitable for a wide range of applications, including NLP, content creation, code generation, and chatbots. Its scalability ensures that it can handle both small and large projects effectively:

- Enterprise-level applications

- Custom AI tools

- Customer service automation

- Content creation

Mistral is particularly suited for real-time applications that require fast inference and high performance. With its efficient design and speed-optimized architecture, it excels in areas like coding tasks, chatbot deployment, and instructional AI. Additionally, its compact size allows it to be deployed on standard machines without significant resource overhead.

6. Deployment Options

LLaMA AI can be deployed across various environments but requires robust infrastructure, especially for larger models like LLaMA 70B.

Mistral offers superior portability, allowing businesses to deploy the models in a serverless environment, on public cloud services like Azure and AWS, or in on-premise environments. This independence from cloud providers gives Mistral users flexibility and control over their AI deployments.

Llama vs. Mistral – Which is Better?

The choice between Mistral and LLaMA AI depends on your specific needs:

- Mistral is the better choice if you prioritize efficiency, speed, and resource-friendly deployment. Its Mistral 7B model offers superior performance in key benchmarks despite its smaller size, making it ideal for real-time applications, small- to mid-scale projects, and businesses that need powerful AI without the high computational costs.

- LLaMA AI is a more versatile and scalable option for developers and businesses looking for a customizable, general-purpose AI model. Its open-source nature and wide range of model sizes allow it to be adapted to virtually any AI task, from NLP to large-scale enterprise solutions.

Ultimately, the right choice depends on whether your focus is on speed and efficiency (Mistral) or customizability and scalability (LLaMA).

- For businesses and developers looking for resource-efficient, real-time AI applications with a focus on fast deployment and performance, Mistral provides a highly compelling solution. The Mistral 7B model stands out for its impressive performance relative to its size, making it particularly attractive for companies with limited computational infrastructure but high-performance needs.

- For organizations and researchers that require a more scalable, open-source, and highly customizable AI model that can handle a wide variety of NLP tasks, LLaMA AI offers a better solution. Its ability to be fine-tuned and adapted for large-scale tasks, combined with its wide range of model sizes (from LLaMA 7B to LLaMA 70B), makes it versatile for businesses that need an adaptable AI framework for extensive applications.

Both Mistral and LLaMA are leading-edge AI technologies, and the choice between them depends on the specific requirements of your projects, such as deployment preferences, resource availability, and the scale of your AI needs. Mistral’s focus on portability and speed makes it a powerful tool for developers needing fast, efficient solutions, while LLaMA’s strength lies in its scalability and broad applicability in research and enterprise environments.

For more insights into AI tools and large language models, explore the best AI tools and AI language models to determine which AI solutions best suit your workflow.
