Qwen2-VL: Revolutionizing Multimodal AI with Vision-Language Excellence


The AI landscape continues to evolve, with vision-language models (VLMs) paving the way for more intelligent and versatile systems. Among the trailblazers is Qwen2-VL, an advanced multimodal AI developed by Alibaba Group. With groundbreaking features like dynamic resolution processing, extended context handling, and multilingual support, Qwen2-VL is setting a new benchmark in the field.

In this blog, we explore how Qwen2-VL revolutionizes multimodal AI, how it compares to competitors, its real-world applications, and the integration of AnonAI with Qwen2.5 Agent, showcasing the practical applications of these innovations.

1. How Qwen2-VL is Revolutionizing Multimodal AI

Multimodal AI enables systems to process and integrate diverse types of data—text, images, and more. Qwen2-VL brings transformative advancements to this domain, providing efficiency, flexibility, and functionality across a variety of tasks.

1.1. Dynamic Resolution Processing

One of Qwen2-VL’s standout features is Naive Dynamic Resolution, which lets the model process images of varying resolutions by mapping each image to a variable number of visual tokens instead of forcing every image into one fixed size. This improves computational efficiency and the accuracy of tasks such as:

- Object Detection: Identifying objects precisely across a wide range of image sizes and qualities.

- Pattern Recognition: Analyzing complex visual patterns for detailed insights.

- Seamless Integration: Adapting visuals for better alignment with textual data.

For instance, an e-commerce platform could use Qwen2-VL to identify and recommend products based on user-uploaded photos, even if the images vary in quality or size.
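To make the idea of a variable visual token count concrete, here is a minimal sketch of how a token estimate could be computed from an image's size. It is an illustration, not the model's actual preprocessing code: the patch size of 14, the 2x2 patch merge, and the `min_pixels` / `max_pixels` defaults are assumptions, and `visual_token_count` is a hypothetical helper name.

```python
import math

PATCH = 14               # assumed ViT patch size
MERGE = 2                # assumed 2x2 merge of adjacent patches into one token
FACTOR = PATCH * MERGE   # under these assumptions, one token covers 28x28 pixels


def visual_token_count(height, width,
                       min_pixels=256 * 28 * 28,
                       max_pixels=1280 * 28 * 28):
    """Estimate how many visual tokens an image produces (hypothetical helper)."""
    # Snap each side to the nearest multiple of 28, keeping at least one cell.
    h = max(FACTOR, round(height / FACTOR) * FACTOR)
    w = max(FACTOR, round(width / FACTOR) * FACTOR)

    if h * w > max_pixels:
        # Too large: shrink while preserving the aspect ratio.
        scale = math.sqrt((height * width) / max_pixels)
        h = math.floor(height / scale / FACTOR) * FACTOR
        w = math.floor(width / scale / FACTOR) * FACTOR
    elif h * w < min_pixels:
        # Too small: enlarge while preserving the aspect ratio.
        scale = math.sqrt(min_pixels / (height * width))
        h = math.ceil(height * scale / FACTOR) * FACTOR
        w = math.ceil(width * scale / FACTOR) * FACTOR

    return (h // FACTOR) * (w // FACTOR)


if __name__ == "__main__":
    for size in [(448, 448), (896, 896), (4000, 4000)]:
        print(size, "->", visual_token_count(*size), "tokens")
```

Under these assumptions, a small thumbnail and a large product photo produce different token counts, and very large images are capped by `max_pixels` so the cost stays bounded.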

1.2. Multimodal Rotary Position Embedding (M-RoPE)

Qwen2-VL uses M-RoPE, which splits the rotary position embedding into temporal, height, and width components so that text, images, and videos share a single positional scheme. This supports the integration of multiple data types for tasks like:

- Generating detailed descriptions for images.

- Answering complex visual questions.

- Creating narratives that incorporate both visual and textual elements.

With M-RoPE, Qwen2-VL ensures that multimodal interactions are not only accurate but also contextually coherent, enabling applications such as automated storytelling or visual explanation tools.
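The sketch below illustrates one way to assign three-part position IDs to a mixed sequence of text and image tokens. It is a simplified illustration under stated assumptions: text tokens reuse one index for all three components, image tokens keep a constant temporal index while the height and width indices follow the patch grid, and the next segment starts after the largest index used so far. The function name `mrope_position_ids` and the segment tuple format are hypothetical.

```python
def mrope_position_ids(segments):
    """Return one (temporal, height, width) ID triple per token.

    `segments` is a list of ("text", n_tokens) or ("image", rows, cols)
    tuples. This input format is an assumption made for illustration.
    """
    ids = []
    next_pos = 0
    for seg in segments:
        kind = seg[0]
        if kind == "text":
            # Text: all three components carry the same 1D position.
            for _ in range(seg[1]):
                ids.append((next_pos, next_pos, next_pos))
                next_pos += 1
        elif kind == "image":
            _, rows, cols = seg
            # Image: temporal index is constant, height and width follow the grid.
            for r in range(rows):
                for c in range(cols):
                    ids.append((next_pos, next_pos + r, next_pos + c))
            # Continue after the largest index used by this image.
            next_pos += max(rows, cols)
        else:
            raise ValueError(f"unknown segment type: {kind!r}")
    return ids


if __name__ == "__main__":
    sequence = [("text", 2), ("image", 2, 3), ("text", 1)]
    for triple in mrope_position_ids(sequence):
        print(triple)
```

Because an image advances the position counter by only its larger grid dimension rather than by its full token count, position indices grow more slowly than they would in a flat 1D layout.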

1.3. Long-Context Support for Extended Tasks

Unlike traditional models with limited token capacities, Qwen2-VL supports up to 128,000 tokens, enabling:

- Processing large documents with embedded visuals.

- Managing complex, multi-turn conversations with consistent context.

- Creating in-depth analyses of visual data combined with text.

For example, legal professionals can use Qwen2-VL to analyze contracts with associated visual exhibits, generating summaries and insights in a single output.
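As a rough planning aid, the arithmetic behind a context budget can be sketched as follows. The 128,000-token limit is the figure quoted in this article; the output reserve and the function name `fits_in_context` are assumptions for illustration, and real token counts would come from the tokenizer and image preprocessor.

```python
def fits_in_context(text_tokens, image_token_counts,
                    context_limit=128_000, reserved_for_output=2_000):
    """Check whether text plus images fit in the context window.

    Returns (fits, remaining), where `remaining` is negative when over budget.
    """
    total = text_tokens + sum(image_token_counts)
    budget = context_limit - reserved_for_output  # leave room for the reply
    return total <= budget, budget - total


if __name__ == "__main__":
    # A contract of about 100,000 text tokens with 20 exhibits of 1,024 tokens each.
    fits, remaining = fits_in_context(100_000, [1024] * 20)
    print("fits:", fits, "remaining tokens:", remaining)
```

A check like this helps decide up front whether a long document with many exhibits needs to be split into several requests.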

1.4. Multilingual Support

Qwen2-VL’s support for over 29 languages makes it a globally applicable tool. It excels in:

- Translating multilingual text in multimodal contexts.

- Adapting visual content to local languages and cultures.

- Providing accessibility for non-English-speaking users.

This capability enables businesses to cater to diverse markets effectively, offering localized solutions powered by AI.

2. Qwen2-VL vs. Other Vision-Language Models

Vision-language models like OpenAI’s GPT-4 Vision and Google DeepMind’s Flamingo are well-known reference points in this field. However, Qwen2-VL brings capabilities that set it apart.

| Feature | Qwen2-VL | GPT-4 Vision | Google Flamingo |
|---|---|---|---|
| Dynamic Resolution | Yes | Limited | Limited |
| Long-Context Support | Up to 128,000 tokens | ~32,000 tokens | ~16,000 tokens |
| Multilingual Support | 29+ languages | Moderate | Limited |
| Visual Tokenization | Advanced and flexible | Strong | Moderate |

How Qwen2-VL Stands Out

- Dynamic Visual Processing: Qwen2-VL processes varying resolutions effortlessly, making it more versatile in real-world scenarios.

- Extended Context Handling: Its ability to process up to 128,000 tokens provides a significant advantage in document-heavy and long-term conversational tasks.

- Broad Linguistic Reach: With support for over 29 languages, Qwen2-VL delivers unparalleled multilingual functionality, making it ideal for global applications.

3. Real-World Applications of Qwen2-VL

The versatility of Qwen2-VL enables its use across a range of industries and scenarios. Here are some of its most impactful applications:

3.1. E-Commerce

In e-commerce, Qwen2-VL enhances the user experience by enabling visual search and personalized recommendations. Customers can upload product images, and the model analyzes them to identify similar items or provide suggestions tailored to their preferences. Its ability to process multilingual product descriptions and generate marketing content further streamlines operations, allowing businesses to reach global markets effectively.
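A visual search request of this kind could be expressed as a chat-style message that pairs an image with a text instruction. The sketch below only builds the request payload as plain Python data. The content layout with `"type": "image"` and `"type": "text"` entries follows the chat format commonly shown for Qwen2-VL, but the helper name `build_visual_search_messages`, the prompt wording, and the example file path are hypothetical, and sending the payload to a model is not shown.

```python
def build_visual_search_messages(image_uri, language="English"):
    """Build a chat-style request asking for a product description.

    Hypothetical helper: it returns plain data and calls no model.
    """
    prompt = (
        "Describe the product in this photo (category, color, material) "
        f"so it can be matched against a catalog. Answer in {language}."
    )
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image_uri},
                {"type": "text", "text": prompt},
            ],
        }
    ]


if __name__ == "__main__":
    # The path below is a placeholder, not a real file.
    messages = build_visual_search_messages("file:///tmp/upload.jpg", "Spanish")
    print(messages[0]["content"][1]["text"])
```

Keeping the payload construction separate from the model call makes it easy to reuse the same request for different languages or catalog back ends.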

For marketers, Qwen2-VL integrates well with AI tools for marketing to automate campaigns and analyze customer data. It pairs effectively with AI tools like ChatGPT to power multilingual chatbots, improving customer support experiences.

Content creation also benefits from pairing Qwen2-VL with AI paraphrasing or writing tools to produce engaging product descriptions and marketing copy. Combined with a free AI image generator, Qwen2-VL can help build visually rich catalogs that resonate with customers.

For those seeking comprehensive solutions, exploring the best AI tools alongside Qwen2-VL can elevate any e-commerce strategy, driving efficiency and customer satisfaction.

3.2. Education

In education, Qwen2-VL proves invaluable for creating engaging and interactive learning materials. Its advanced vision-language processing helps explain complex topics with rich visual aids, making them accessible to learners of all ages. Teachers and institutions can also use the model to automate grading for assignments that include diagrams, handwritten text, or visual components. By translating educational resources into multiple languages, Qwen2-VL ensures inclusivity and accessibility for students worldwide.

Educators can also pair Qwen2-VL with AI tools for teachers to automate repetitive tasks such as grading and lesson planning. By leveraging these tools, teachers save time and focus more on student interaction and tailored support.

For summarizing lengthy academic materials, Qwen2-VL can be paired with an AI summarization tool to condense articles, research papers, or video content into digestible formats. This makes it easier for both teachers and students to handle extensive educational resources efficiently.

Students also benefit from Qwen2-VL’s ability to personalize learning. Its multilingual support ensures accessibility to resources in different languages, while integration with AI tools for students helps them tackle assignments, prepare for exams, and even improve their writing skills. By combining these tools with Qwen2-VL, students can enhance their academic performance and develop independent learning habits.

3.3. Healthcare

Healthcare is another sector benefiting from Qwen2-VL’s robust features. Medical professionals can leverage its multimodal capabilities to analyze diagnostic images, such as X-rays and MRIs, alongside textual patient records, enabling more accurate diagnoses and treatment planning. In telemedicine, the model facilitates remote consultations by processing visual data and multilingual text inputs, breaking down barriers in global healthcare accessibility. Researchers in the pharmaceutical industry can use Qwen2-VL to analyze complex datasets and scientific literature, accelerating drug discovery and innovation.

Qwen2-VL’s versatility extends to business intelligence as well. It excels in processing large datasets, extracting meaningful insights, and generating structured reports that combine text and visuals. Whether in market analysis, strategic planning, or operational efficiency, businesses can rely on Qwen2-VL to provide actionable intelligence, helping them stay ahead in competitive environments. Its ability to adapt to varied tasks and industries makes it a cornerstone for innovation across the board.

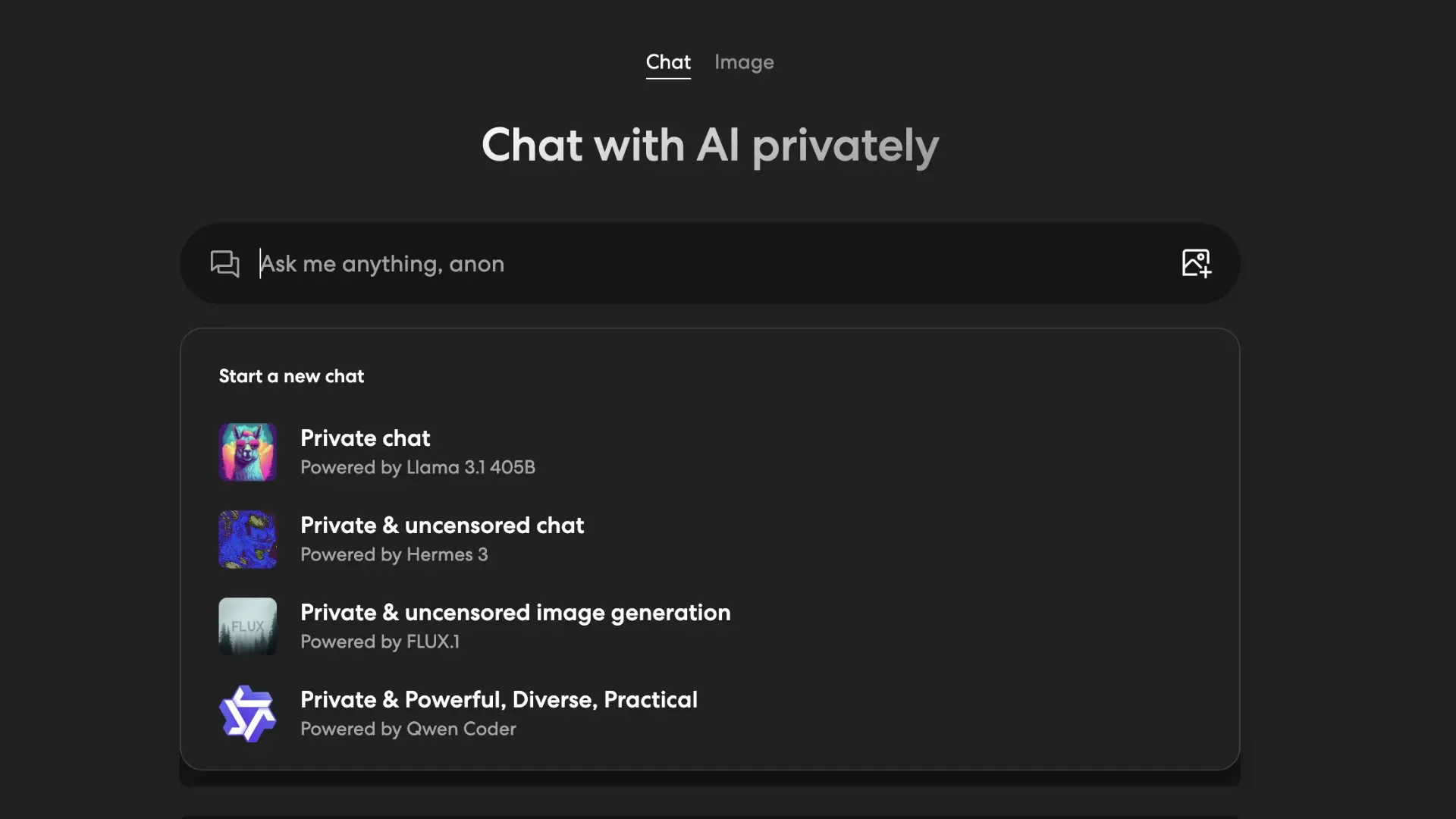

4. AnonAI with Qwen2.5 Agent

The integration of Qwen2.5 Agent with AnonAI redefines how privacy and AI intersect, offering users an unparalleled experience in secure and anonymous interactions. Designed to prioritize confidentiality, AnonAI ensures that user data remains protected while delivering the power of advanced AI technologies.

AnonAI stands out as a leading platform for private AI applications. With no accounts, no tracking, and end-to-end encryption, it ensures that all user conversations and activities remain entirely confidential. This makes it an ideal solution for businesses handling sensitive data and individuals seeking privacy in their AI interactions.

One of AnonAI’s most innovative features is its integration of private AI chat. Users can engage in seamless conversations with expert AI agents without worrying about their data being logged or shared. These agents can assist in tasks like creative writing, business strategy, and even personal guidance while maintaining absolute anonymity.

For users who require visual AI capabilities, AnonAI offers compatibility with a private AI image generator, enabling the creation of secure, high-quality images for professional or personal use. Combined with Qwen2.5 Agent’s robust multimodal capabilities, this feature enhances creative workflows while preserving privacy.

AnonAI also promotes secure and engaging communication through its encrypted chat rooms. These rooms allow users to collaborate, share ideas, or simply interact with like-minded individuals in a safe environment.

5. FAQs

What is Qwen-VL?

Qwen-VL is a vision-language model developed by Alibaba’s Qwen Team. It combines text and image understanding to handle multimodal tasks such as image captioning, visual question answering, and generating narratives from visual content. It’s ideal for applications in e-commerce, media, and interactive AI systems.

What is Qwen-VL-Max?

Qwen-VL-Max is an advanced version of the Qwen-VL model, designed to handle tasks requiring longer context and higher-resolution images. It offers improved multimodal comprehension, making it suitable for complex applications like medical imaging analysis, research, and large-scale data processing.

What is Qwen-Max?

Qwen-Max is a term used to describe the top-tier capabilities of Qwen models, combining long-context support, advanced multimodal processing, and multilingual expertise. It represents the pinnacle of Qwen’s AI capabilities, addressing sophisticated use cases in business, healthcare, and creative industries.

What is Qwen 72B Chat?

Qwen 72B Chat is the instruction-tuned version of the Qwen2.5-72B model, optimized for conversational tasks. With 72 billion parameters, it provides:

- Accurate and context-aware dialogue responses.

- Multilingual support for seamless global interactions.

- Role-based conversations for virtual assistants and chatbots.

What is Qwen 7B Chat?

Qwen 7B Chat is a lightweight conversational model with 7 billion parameters. It offers efficient, responsive dialogue capabilities while maintaining strong contextual understanding, making it ideal for chatbots and virtual assistants in resource-constrained environments.

What is Qwen-VLLM?

Qwen-VLLM refers to models in the Qwen series that are optimized as vision-language large language models. These models handle both text and image inputs for multimodal applications, enabling tasks such as:

- Image-based question answering.

- Generating visually descriptive text.

- Multimodal content creation for diverse industries.

Conclusion

The combination of Qwen2-VL’s multimodal advancements and AnonAI’s privacy-focused platform showcases the future of AI—intelligent, versatile, and secure. Whether you’re leveraging Qwen2-VL for e-commerce, healthcare, or creative endeavors, or exploring the personalized capabilities of AnonAI with Qwen2.5 Agent, these innovations redefine the possibilities of AI in everyday life.

As AI continues to evolve, platforms like Qwen2-VL and AnonAI exemplify how cutting-edge technology can enhance human creativity and problem-solving while respecting user privacy.
